JavaScript is everywhere, from modern web apps to dynamic e-commerce stores and interactive landing pages. It powers slick animations, real-time updates, and app-like experiences that engage users. But when it comes to SEO, JavaScript can be tricky. If search engines can’t crawl or index your content correctly, your rankings and visibility can take a serious hit.

That’s where this guide comes in.

Table of Contents:

- What is JavaScript SEO?

- How Search Engines Handle JavaScript

- Why JavaScript SEO is Important

- Top JavaScript SEO Best Practices

- JavaScript SEO FAQs

- Common JavaScript SEO Pitfalls to Avoid

- How to Measure JavaScript SEO Success

- Advanced JavaScript SEO Strategies

- Recommended JavaScript SEO Tools

- Final Thoughts and Call to Action

1. What is JavaScript SEO?

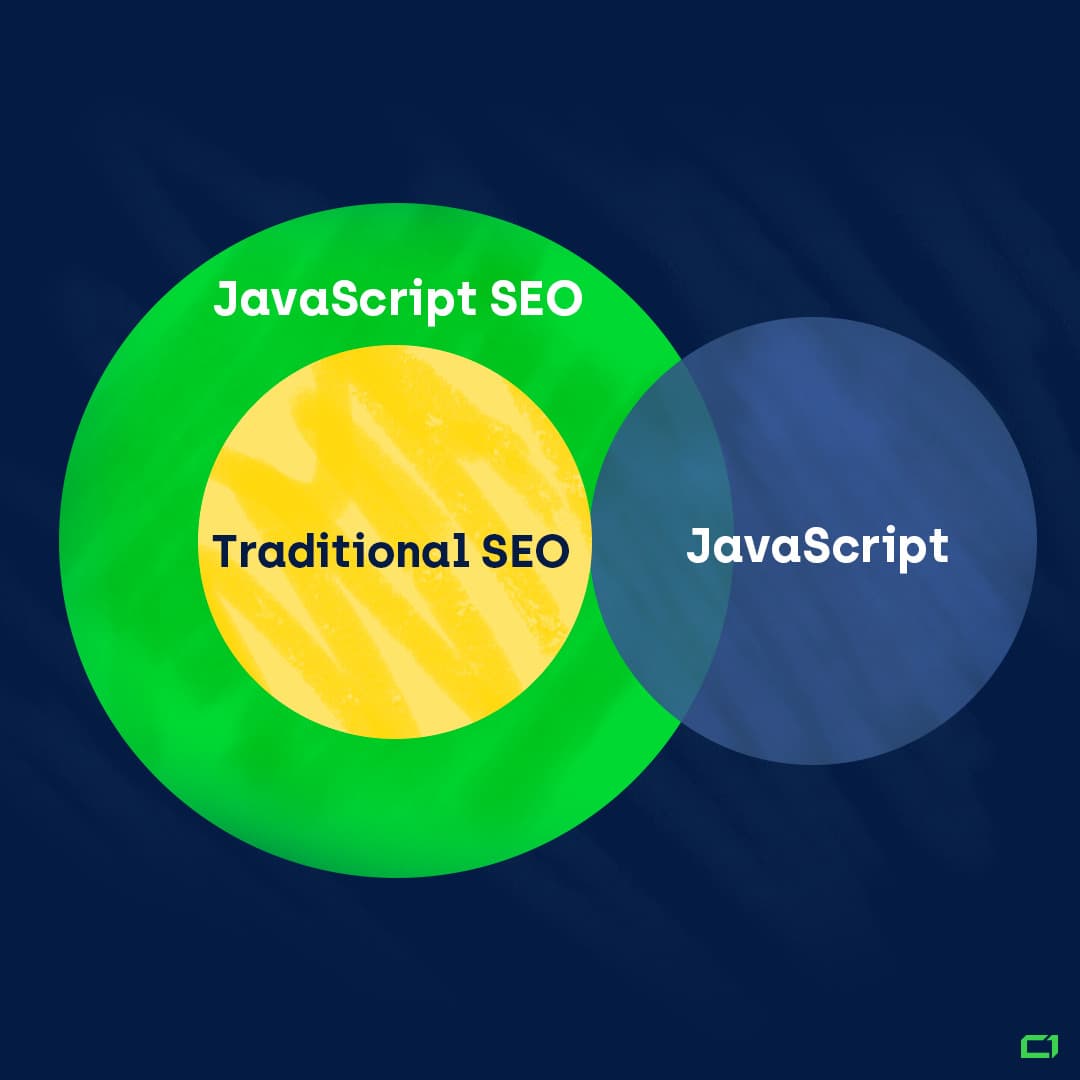

JavaScript SEO refers to optimizing websites that are built with, or rely heavily on, JavaScript so that they remain fully accessible, crawlable, and indexable by search engines. It sits at the intersection of frontend development and search engine optimization, ensuring that dynamic, interactive, and app-like web experiences don’t come at the cost of visibility in search engine results pages (SERPs).

Content can reach the page in two ways:

- Static HTML: Content is visible in the source code immediately.

- JavaScript-rendered content: Content appears only after scripts execute and build the page.

If search engines can’t render your JavaScript-generated content:

- Your product won’t appear in search results.

- Your content won’t help you rank for target keywords.

- You’ll miss out on organic traffic, leads, and sales.
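The difference between static HTML and JavaScript-rendered content can be checked roughly by asking whether a phrase is present in the raw HTML before any script runs. The sketch below is only an approximation: the helper names (`visibleTextFromRawHtml`, `isInRawHtml`) and the sample pages are hypothetical, and the regex-based tag stripping is a shortcut for illustration, not a real HTML parser.

```javascript
// Remove scripts, styles, and tags so that only the text a
// non-rendering crawler could read from the raw HTML remains.
function visibleTextFromRawHtml(html) {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ")
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// True when the phrase is readable without executing JavaScript.
function isInRawHtml(html, phrase) {
  return visibleTextFromRawHtml(html)
    .toLowerCase()
    .includes(phrase.toLowerCase());
}

// Hypothetical static page: the heading is in the source itself.
const staticPage =
  `<html><body><h1>Blue Widget</h1><p>Hand-made blue widget.</p></body></html>`;

// Hypothetical JavaScript-rendered page: the heading only exists after the script runs.
const jsPage = `<html><body><div id="root"></div>
<script>document.getElementById("root").innerHTML = "<h1>Blue Widget</h1>";</script>
</body></html>`;

console.log(isInRawHtml(staticPage, "Blue Widget")); // true
console.log(isInRawHtml(jsPage, "Blue Widget"));     // false
```

A phrase that fails this check is not necessarily invisible to Google, which can render JavaScript, but it does depend on rendering succeeding.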

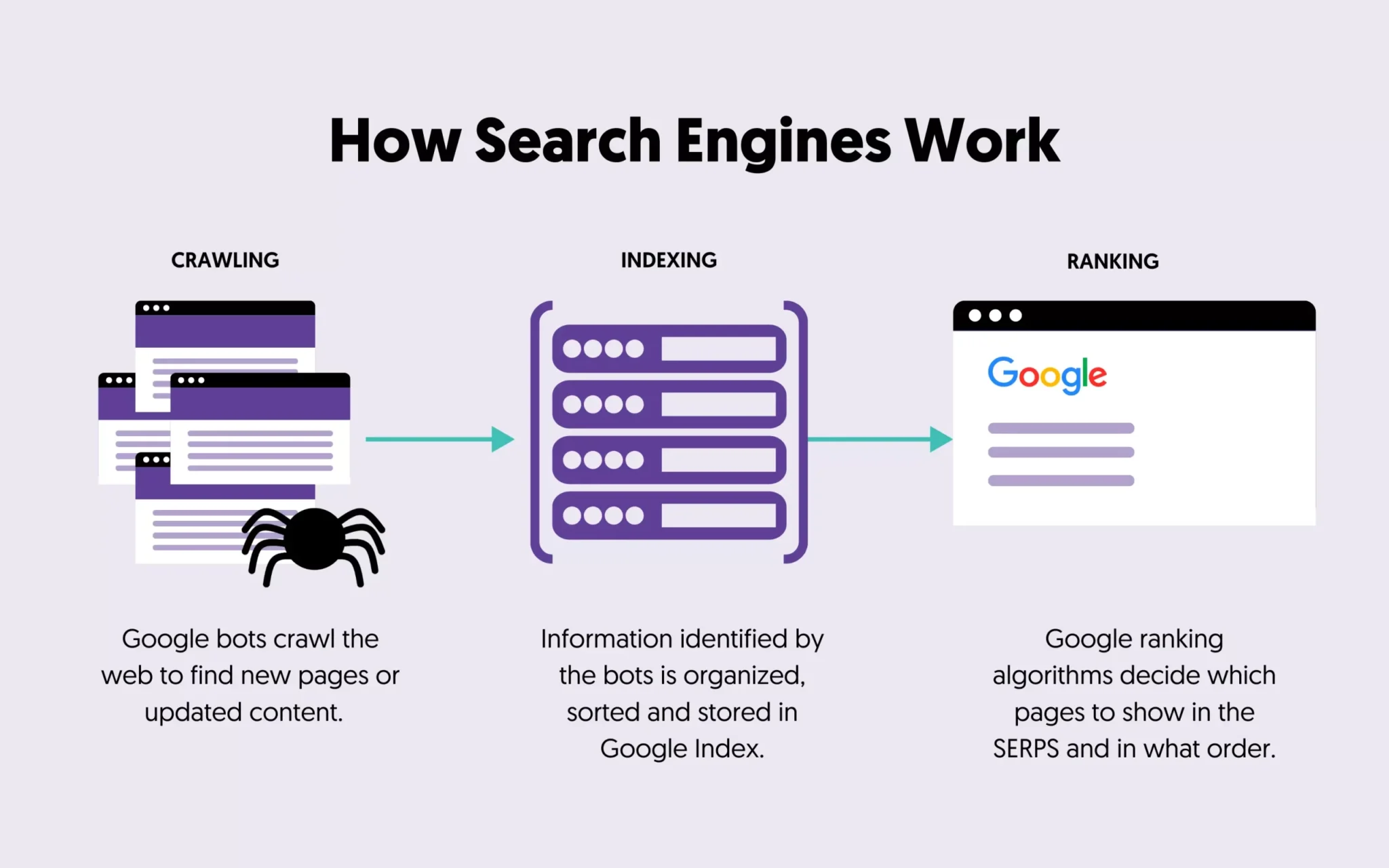

1. Crawling

Search engines use bots (spiders) to discover pages by following links. If your content only appears after a JavaScript interaction (e.g., clicking a button or scrolling), the crawler might never see it.
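Because crawlers discover pages by following links, it can help to list which links on a page are actually followable. The sketch below assumes that only `<a>` elements with a real URL in `href` count, and that navigation wired through `onclick` handlers does not. The function name `extractCrawlableLinks` and the sample markup are hypothetical, and the regular expression is a simplification of real HTML parsing.

```javascript
// Collect href values from <a> tags, skipping entries a crawler
// cannot treat as a separate page: empty hrefs, same-page fragments,
// and javascript: pseudo-URLs.
function extractCrawlableLinks(html) {
  const links = [];
  const anchorPattern = /<a\b[^>]*\bhref\s*=\s*(["'])(.*?)\1[^>]*>/gi;
  for (const match of html.matchAll(anchorPattern)) {
    const href = match[2].trim();
    const isPseudoLink =
      href === "" ||
      href.startsWith("#") ||
      href.toLowerCase().startsWith("javascript:");
    if (!isPseudoLink) links.push(href);
  }
  return links;
}

// Hypothetical navigation markup mixing real links and click handlers.
const nav = `
  <a href="/products/blue-widget">Blue Widget</a>
  <a href="javascript:void(0)" onclick="loadPage('red-widget')">Red Widget</a>
  <span onclick="location.href='/products/green-widget'">Green Widget</span>
  <a href="#reviews">Reviews</a>
`;

console.log(extractCrawlableLinks(nav)); // [ '/products/blue-widget' ]
```

Only the first link survives: the red and green widget pages are reachable for users who click, but nothing in the markup gives a crawler a URL to follow.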

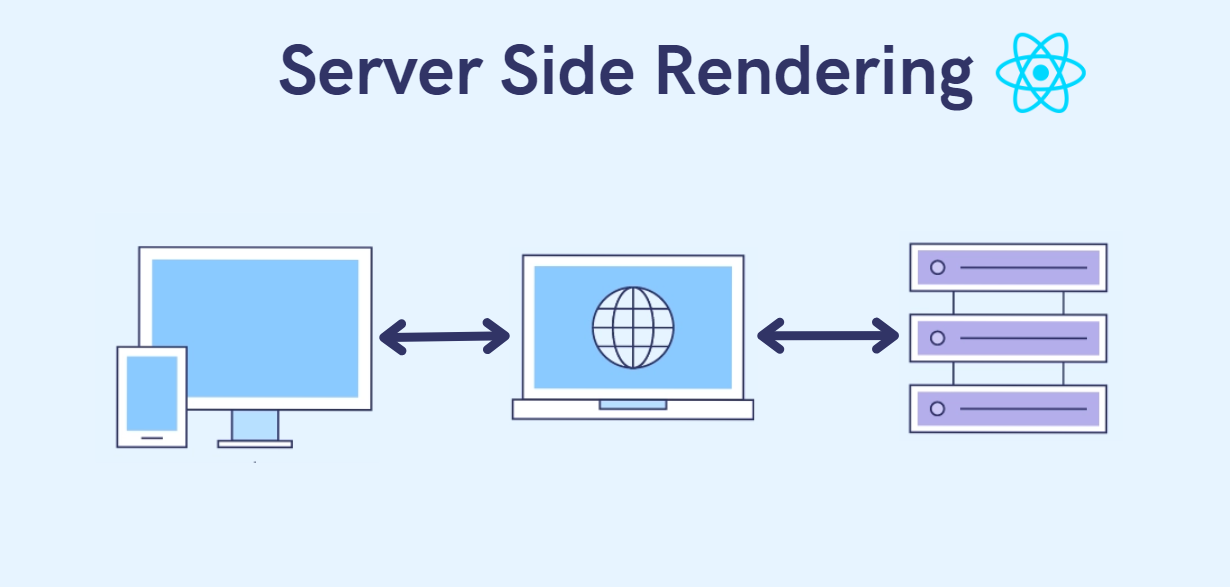

2. Rendering

Rendering refers to how the bot “builds” the page. This involves executing JavaScript and turning it into a visible layout with text, images, and interactions, just like a browser would. Rendering is resource-intensive and slower than parsing raw HTML. Google uses a two-wave indexing model: it first indexes the basic HTML and then returns later to render the JavaScript-heavy content. This delay can affect when, and whether, your content gets fully indexed.

3. Indexing

Once the page is rendered, search engines evaluate the content to determine its relevance and store it in the search index. Even if your site is otherwise well-optimized, your page won’t rank as expected if the content is missing.

Content that search engines miss can lead to:

- Lower rankings

- Invisible or incomplete search listings

- Reduced click-through rates

- Loss of organic traffic

JavaScript SEO matters most for:

- E-commerce websites where product info, reviews, or pricing are dynamically loaded

- News/media websites using infinite scroll or dynamic loading of content

- Startups and SaaS platforms using SPA frameworks like React or Vue

- Mobile-first web experiences relying on interactive UIs

- Sites with rich web apps or dashboards built using JavaScript

Done well, JavaScript SEO ensures that:

- Crawlers quickly discover your pages.

- Your content is rendered and understood correctly.

- Your site maintains optimal visibility in organic search.

2. How Search Engines Handle JavaScript

Googlebot is currently the most advanced web crawler in handling JavaScript. But even Google has its limitations, and its process isn’t as instantaneous or reliable as many developers think.

Google processes JavaScript-heavy pages in three distinct stages:

Crawling

- Googlebot first discovers the page URL through sitemaps, internal links, backlinks, or other sources.

- At this point, it retrieves the raw HTML.

- If the HTML is mostly empty and relies heavily on JavaScript, Googlebot must render the page before it can understand the content.

Rendering

- Google places the page into a rendering queue.

- It executes JavaScript using a headless version of Chrome, simulating how a real browser would load the page.

- This step is resource-intensive and doesn’t happen instantly; sometimes it’s delayed by hours or even days.

- If resources (scripts, APIs, third-party files) fail to load or are blocked, content might not render.

Indexing

- After rendering, Googlebot can finally “see” the page’s full content.

- If the content is meaningful and relevant, it gets stored in Google’s index.

- But if rendering fails or takes too long, your page might never be properly indexed.

Two practical consequences follow from this pipeline:

- Two-wave indexing: Google might index the static HTML quickly (first wave), but it won’t see your JavaScript content until rendering happens (second wave). This delay can hurt SEO performance, especially if time-sensitive content (like product launches or news updates) is involved.

- Content might be missed: If a critical element, like a product description or CTA, is loaded only after a user scrolls or interacts, Google might not see it unless special precautions are taken (like server-side rendering or prerendering).
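One rendering failure mentioned above is blocked resources. A common cause is a robots.txt rule that disallows the directory holding the site’s scripts. The sketch below is a deliberately simplified check: it reads only `User-agent` and `Disallow` lines and uses plain prefix matching, with no wildcard or `Allow` support. The function names, the sample robots.txt, and the resource paths are all hypothetical.

```javascript
// Simplified robots.txt reader: returns the Disallow prefixes that apply
// to the given agent, falling back to the "*" group when the agent has
// no group of its own. Wildcards and Allow rules are NOT handled.
function disallowedPrefixes(robotsTxt, agent = "googlebot") {
  const groups = []; // each group: { agents: [...], disallow: [...] }
  let current = null;
  let lastWasAgent = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, "").trim(); // drop comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();

    if (field === "user-agent") {
      // Consecutive User-agent lines share one group.
      if (!lastWasAgent) {
        current = { agents: [], disallow: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      lastWasAgent = true;
    } else {
      if (field === "disallow" && current && value !== "") {
        current.disallow.push(value);
      }
      lastWasAgent = false;
    }
  }

  const specific = groups.filter((g) => g.agents.includes(agent));
  const chosen =
    specific.length > 0 ? specific : groups.filter((g) => g.agents.includes("*"));
  return chosen.flatMap((g) => g.disallow);
}

// List the resource paths a crawler would not be allowed to fetch.
function blockedResources(robotsTxt, resourcePaths, agent = "googlebot") {
  const prefixes = disallowedPrefixes(robotsTxt, agent);
  return resourcePaths.filter((path) =>
    prefixes.some((prefix) => path.startsWith(prefix))
  );
}

// Hypothetical robots.txt that accidentally blocks the script bundle.
const robots = `
User-agent: *
Disallow: /assets/js/
Disallow: /admin/
`;
const resources = ["/assets/js/app.bundle.js", "/assets/css/site.css", "/api/products"];

console.log(blockedResources(robots, resources)); // [ '/assets/js/app.bundle.js' ]
```

If the bundle that builds the page shows up in this list, the renderer cannot fetch it, and the content it generates will not be seen.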

Other Search Engines

Bing

- Bingbot has improved its ability to process JavaScript, especially after adopting Microsoft’s Edge rendering engine.

- However, it still lags behind Google in both speed and completeness of rendering.

- Bing’s documentation encourages web admins to keep core content in static HTML or use dynamic rendering.
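Dynamic rendering, mentioned above, means serving prerendered HTML to crawlers while regular visitors receive the JavaScript app. A minimal sketch of the decision step follows. The function names and the two HTML strings are hypothetical, and the user-agent pattern is an illustrative, incomplete list of crawler tokens. Note that Google’s own documentation describes dynamic rendering as a workaround rather than a long-term solution.

```javascript
// Illustrative (not exhaustive) list of crawler tokens found in
// user-agent strings. Case-insensitive, no global flag, so test() is stateless.
const BOT_PATTERN = /googlebot|bingbot|yandex|baiduspider|duckduckbot/i;

function isSearchBot(userAgent) {
  return BOT_PATTERN.test(userAgent || "");
}

// Pick which HTML to send: crawlers get the prerendered snapshot,
// everyone else gets the normal JavaScript app shell.
function chooseResponse(userAgent, prerenderedHtml, appShellHtml) {
  return isSearchBot(userAgent) ? prerenderedHtml : appShellHtml;
}

// Hypothetical payloads.
const prerendered = "<h1>Blue Widget</h1><p>Hand-made blue widget.</p>";
const appShell = '<div id="root"></div><script src="/assets/app.js"></script>';

const bingUa = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)";
const chromeUa = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36";

console.log(chooseResponse(bingUa, prerendered, appShell) === prerendered); // true
console.log(chooseResponse(chromeUa, prerendered, appShell) === appShell);  // true
```

The prerendered snapshot should contain the same content users see; serving crawlers something materially different risks being treated as cloaking.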

Yahoo

- Bing powers Yahoo’s search results, so it inherits the same limitations.

- JavaScript-heavy content may be missed if Bing does not render it effectively.

Yandex

- Yandex has made some progress with JavaScript rendering, but it is still relatively limited compared to Western search engines.

- Server-side rendering or prerendering is strongly recommended for Russian-language sites targeting this market.

DuckDuckGo

- DuckDuckGo pulls data from over 400 sources, including Bing, so again, any weaknesses in Bing’s rendering pipeline will affect DuckDuckGo’s ability to index JavaScript content.

Baidu (China)

- Baidu struggles significantly with JavaScript.

- JavaScript should be minimized for content aimed at Chinese audiences, and server-side rendering is strongly advised.
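Server-side rendering, recommended above for engines with weak JavaScript support, means the server sends HTML that already contains the core content. The sketch below shows the idea with plain string templating. The `renderProductPage` function, the product fields, and the `/assets/app.js` path are hypothetical; a real project would more likely use its framework’s rendering API.

```javascript
// Escape text before placing it into HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the complete page on the server so the title, description,
// and price are in the HTML before any script executes.
function renderProductPage(product) {
  return `<!doctype html>
<html lang="en">
<head>
  <title>${escapeHtml(product.name)}</title>
  <meta name="description" content="${escapeHtml(product.summary)}">
</head>
<body>
  <main id="root">
    <h1>${escapeHtml(product.name)}</h1>
    <p>${escapeHtml(product.summary)}</p>
    <p class="price">${escapeHtml(product.price)}</p>
  </main>
  <script src="/assets/app.js" defer></script>
</body>
</html>`;
}

// Hypothetical product record.
const page = renderProductPage({
  name: "Blue Widget",
  summary: "Hand-made blue widget for home & office.",
  price: "$19.00",
});

console.log(page.includes("<h1>Blue Widget</h1>")); // true
```

The client-side script can still add interactivity after load, but a crawler that never runs it still receives the product name, summary, and price.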

3. Why JavaScript SEO is Important

- Higher Visibility: If bots can’t see it, it doesn’t exist, at least not in search results.

- Faster Indexing: Proper rendering helps your content get indexed quickly.

- Stronger Rankings: Speed, usability, and crawlability are all factors in ranking.

- Better UX = Better SEO: Clean, fast, interactive sites retain users and reduce bounce rates.

4. JavaScript SEO Best Practices

Optimize how scripts load:

- Minify and bundle scripts

- Load asynchronously

- Defer non-critical JS

- Use lazy loading correctly

Test how search engines see your pages:

- Google Search Console – check how Google sees your site

- Lighthouse – performance and SEO scoring

- Chrome DevTools – debug rendering issues
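Two of the script-loading practices above, deferring non-critical JavaScript and lazy loading correctly, come down to which attributes end up in the markup. The sketch below generates that markup from small helpers. The helper names and file paths are hypothetical, and the inputs are assumed to be trusted strings, so no escaping is done.

```javascript
// Non-critical scripts get `defer`, so HTML parsing is not blocked
// while the file downloads. Critical scripts load normally.
function scriptTag(src, { critical = false } = {}) {
  return critical
    ? `<script src="${src}"></script>`
    : `<script src="${src}" defer></script>`;
}

// Native lazy loading keeps the real URL in `src`, so the image is
// discoverable in the markup without any scroll event firing.
// Above-the-fold images are loaded eagerly.
function imageTag(src, alt, { aboveTheFold = false } = {}) {
  const loading = aboveTheFold ? "eager" : "lazy";
  return `<img src="${src}" alt="${alt}" loading="${loading}">`;
}

console.log(scriptTag("/assets/analytics.js"));
// <script src="/assets/analytics.js" defer></script>

console.log(imageTag("/img/widget-detail.jpg", "Blue widget close-up"));
// <img src="/img/widget-detail.jpg" alt="Blue widget close-up" loading="lazy">
```

The pattern to avoid is lazy loading that leaves `src` empty and fills it in only from a scroll handler, since a crawler that does not scroll never triggers it.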

5. FAQs: JavaScript and SEO

6. Common Pitfalls to Avoid

7. Measuring JavaScript SEO Success

- Google Search Console: See indexing, errors, and page performance

- Lighthouse: Technical audits

- Core Web Vitals: Measure UX signals like LCP, INP (which replaced FID in March 2024), and CLS

- Ahrefs/Screaming Frog: Deep crawl analysis

- SERP Rankings: Monitor keyword positions
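To make Core Web Vitals numbers from these tools easier to act on, each value can be rated against the thresholds Google publishes. The sketch below uses the published "good" and "poor" cut-offs for LCP, INP, and CLS. The `rateMetric` function and the sample measurements are hypothetical.

```javascript
// Published Core Web Vitals thresholds.
// LCP and INP are in milliseconds; CLS is a unitless score.
// INP is the responsiveness metric that superseded FID.
const THRESHOLDS = {
  LCP: { good: 2500, poor: 4000 },
  INP: { good: 200, poor: 500 },
  CLS: { good: 0.1, poor: 0.25 },
};

// Rate one measurement as "good", "needs improvement", or "poor".
function rateMetric(name, value) {
  const limits = THRESHOLDS[name];
  if (!limits) throw new Error(`Unknown metric: ${name}`);
  if (value <= limits.good) return "good";
  if (value <= limits.poor) return "needs improvement";
  return "poor";
}

// Hypothetical field measurements for one page.
const measurements = { LCP: 3100, INP: 180, CLS: 0.31 };

for (const [name, value] of Object.entries(measurements)) {
  console.log(`${name}: ${value} -> ${rateMetric(name, value)}`);
}
// LCP: 3100 -> needs improvement
// INP: 180 -> good
// CLS: 0.31 -> poor
```

On a JavaScript-heavy page, a slow LCP or high CLS often traces back to content that is injected late by scripts, which ties these scores to the rendering issues discussed earlier.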

8. Advanced Strategies for JavaScript SEO

9. JavaScript SEO Tools and Resources

- Google Search Console – Crawl, index diagnostics

- Google Lighthouse – Audit performance and SEO

- Screaming Frog – JavaScript rendering crawls

- Rendertron / Puppeteer / Prerender.io – Dynamic rendering

- WebPageTest – Speed and rendering insights

- SEMRush / Ahrefs / Moz – Technical SEO and SERP tracking

- LogRocket / Sentry – Monitor frontend errors

10. Final Thoughts and CTA
